sfuqua wrote: I hope I make sense here, because Chomsky's recent comments on AI annoyed the living daylights out of me.

Chomsky recently wrote an editorial for the New York Times in which he seems to think he has proven that AI cannot think.

Chomsky has been doing the rounds lately, giving several interviews a day over the past few months on linguistics, consciousness, AI and education.

Even the experts who understand these language models best remain unable to reverse-engineer a trained model and explain how the particular arrangement of artificial neurons that emerged from training actually produces its external behaviour. In that sense, even the best experts remain ignorant. Chomsky's ignorance, however, is on another level, and so to support his conclusions he necessarily falls back on over-simplistic arguments.

Chomsky claims that what these language models do has very little to do with how the brain processes language (while admitting that he knows neither how the brain does it nor how the AI does it), and he thinks this is easy to prove from the fact that these models work just as well on impossible languages as on real ones. By "impossible languages" he presumably means languages that don't have a consistent grammar; a neural network will still find the patterns in such a language and manage to model them. My response would be that real language only follows consistent rules up to a point, after which you end up with tonnes of exceptions that don't follow the strict rules. Even within these exceptions there are patterns to be found, and they are not going to follow strict Chomskyan grammatical rules. You need something more powerful than grammar to model those patterns. The fact that grammar can't do it, while both artificial AND biological neural networks can, suggests to me that the latter two have far more in common with each other than either has with anything Chomsky has proposed as a model of language processing.

Obviously, no AI expert suggests that we've cracked Broca's and Wernicke's areas or anything like that. If there is any differentiation between the types of neurons found in different regions of the brain, AI is not yet at a stage where it replicates it. Essentially, the same kind of artificial neuron can be adapted to a huge range of tasks, be it language, vision, etc. To some extent this is also true of the human brain: after damage to one area, another part of the brain sometimes adapts and takes over. But if there is anything advantageous about the differentiation of neurons across brain regions, AI has yet to replicate it. At the same time, the fact that neurons can adapt to different tasks is another fact that renders Chomsky's argument above useless: even if he is correct that language models work equally well on what he calls "impossible languages", that could simply be analogous to neurons demonstrating their adaptability to a new task.

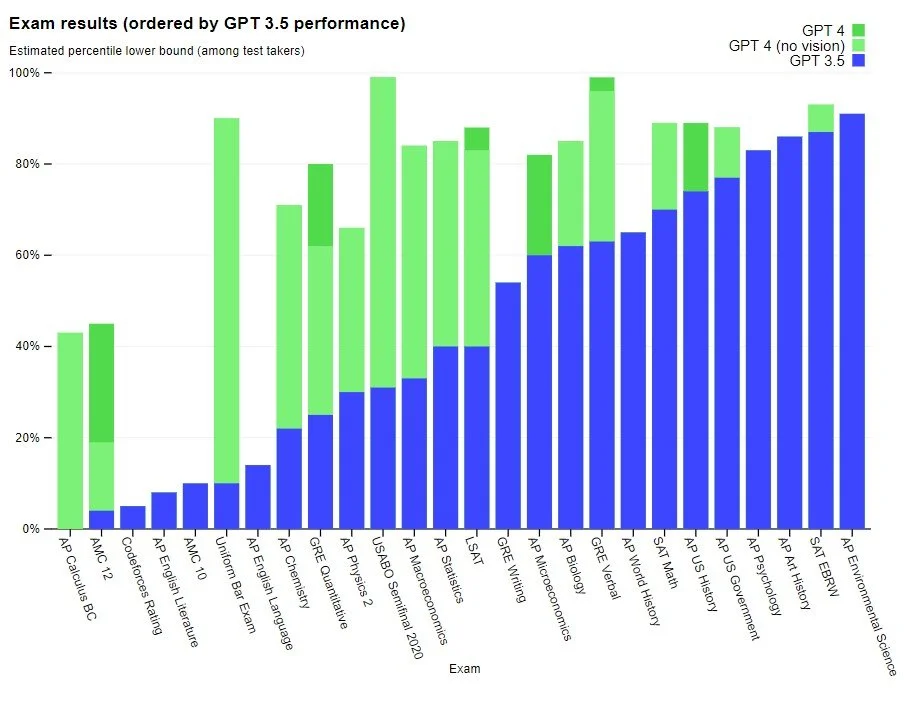

In his recent interviews, he also presents the view that ChatGPT is nothing more than a sophisticated plagiarism device with little application beyond that. I think this mainly shows a lack of imagination. ChatGPT, and now the newly released GPT-4, have plenty of novel applications beyond the obvious use cases of chatting and content generation. As it turns out, these models outperform the previous state of the art on a whole range of natural language processing (NLP) tasks that many applications rely on, including summarisation, translation, tokenisation, lemmatisation, and natural language understanding (e.g. working out the intent behind a search query).

So imagine, for example, a new web application that uses AI to design a logo for your business from a set of requirements. You've found an AI model that works well for that, but it requires a particular kind of prompt that is not terribly easy for a user to write. As the app developer, you can now take a large language model such as ChatGPT or GPT-4 and use it to provide a natural-language interface for the user. The language model's job is to "understand" what the user wants and translate it into the prompt that will work best for the logo generation model. And that is just one example; the applications of a large language model are practically limitless.
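To make the idea concrete, here is a minimal Python sketch of that two-stage design, assuming you have some way to call a language model and a logo model. `LogoApp`, `TRANSLATION_TEMPLATE`, `fake_llm` and `fake_logo_model` are all hypothetical names; the two fakes stand in for real model APIs so the sketch runs offline, and the prompt format is invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical instruction telling the language model how to rewrite a
# free-form request into the rigid prompt format a logo model might expect.
TRANSLATION_TEMPLATE = (
    "Rewrite the following request as a logo prompt of the form "
    "'style: <style>; colours: <colours>; subject: <subject>'.\n"
    "Request: {request}"
)


@dataclass
class LogoApp:
    # Both callables are placeholders: a real app would wrap an LLM API
    # and an image-generation model here.
    llm: Callable[[str], str]
    logo_model: Callable[[str], bytes]

    def handle(self, user_request: str) -> bytes:
        # Step 1: the language model "understands" the request and
        # translates it into the structured prompt.
        instruction = TRANSLATION_TEMPLATE.format(request=user_request)
        structured_prompt = self.llm(instruction)
        # Step 2: the specialised model does the actual design work.
        return self.logo_model(structured_prompt)


def fake_llm(instruction: str) -> str:
    # Offline stand-in for a real LLM: always returns one well-formed prompt.
    return "style: minimalist; colours: green, white; subject: leaf"


def fake_logo_model(prompt: str) -> bytes:
    # Offline stand-in for an image model: just echoes the prompt it received.
    return f"<logo for {prompt}>".encode()


app = LogoApp(llm=fake_llm, logo_model=fake_logo_model)
print(app.handle("Something clean and eco-friendly for my plant shop"))
```

The point of the design is that the user never has to learn the logo model's prompt format; only the translation instruction does.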

And of course, as members of this forum know, many of us have found it quite helpful for language learning. In Japanese, I like to paste in a sentence and ask ChatGPT to tokenise it and explain it word by word, including the conjugations. To do this, ChatGPT is actually integrating several NLP tasks that have traditionally required separate models (understanding the question, tokenisation, lemmatisation, translation, etc., and then producing the explanations), so it's quite a feat that it can do it all in one model. What's more, it is precisely BECAUSE everything is done in one model that it performs so well at the individual tasks. This is due to the transfer learning effect: learning to be good at one task helps the same model become good at another.

I find current AIs to be fascinating, not because they are just like people, but because they are an alien intelligence that has recently arrived in the universe. Of course, they are not human. Exactly how they are different is a fascinating question. How similar are they to humans, what can they do better and what are they weak at?

In all of this, we need to remember that these language models are not at all like humans. ChatGPT didn't go to school as a teenager, have a social life, gather experiences through its senses, or even perceive the passing of time. OpenAI took a shortcut and trained it to perform well at the tasks it was intended for. We don't really know what it would take for an AI to become like a human, sentient and all, but we can at least see that ChatGPT isn't it, because it lacks almost everything that makes up human experience. ChatGPT is good at language, but less good at other things.

Sorry to make this a long post, but ChatGPT said it better than Chomsky.

"What are the differences between how a human brain works and how an artificial neural network works?"

The human brain and artificial neural networks are both capable of processing information, but there are some key differences in how they work:

Structure: The human brain is made up of billions of interconnected neurons, while an artificial neural network is made up of layers of interconnected nodes or artificial neurons.

Learning: The human brain is capable of unsupervised learning, which means it can learn from unstructured data without any external guidance. Artificial neural networks, on the other hand, rely on supervised learning, where they are trained on labeled data and require external feedback to adjust their parameters.

This is another example of ChatGPT being good at language but less good at other things, such as factual knowledge. From what I've read, Google's LaMDA was trained with a different methodology that may make it more reliable for knowledge, but I really see these language models being most useful as a language interface to other systems, at least in the current generation. Google mentioned in a paper that they intended to integrate their language model with an external knowledge system, such as Google Search itself: the language model would primarily be used to understand the question, the external knowledge system would find the answer, and the language model would then be engaged again to communicate the findings back to the user. I believe the new Bing is adopting the same approach in its integration of GPT-4 with Bing search results.
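A minimal Python sketch of that three-step pipeline might look like this. It is only an illustration under loose assumptions: the tiny in-memory `DOCUMENTS` list and word-overlap `search` function are hypothetical stand-ins for a real search engine, and `fake_llm` stands in for a real language model, so none of this reflects how Google or Bing actually implement it.

```python
import re
from typing import Callable, List, Set

# Toy "external knowledge system" (hypothetical): a handful of documents
# searched by word overlap, standing in for a real search engine.
DOCUMENTS = [
    "Mount Everest is 8,849 metres tall.",
    "The Nile is one of the longest rivers in the world.",
]

QUERY_PREFIX = "Rewrite as a search query: "
EVIDENCE_MARKER = "using only: "


def words(text: str) -> Set[str]:
    # Lowercase word set, ignoring punctuation.
    return set(re.findall(r"\w+", text.lower()))


def search(query: str, docs: List[str], k: int = 1) -> List[str]:
    # Rank documents by the number of words they share with the query.
    q = words(query)
    ranked = sorted(docs, key=lambda d: len(q & words(d)), reverse=True)
    return ranked[:k]


def answer(question: str, llm: Callable[[str], str]) -> str:
    # 1. The language model turns the user's question into a search query.
    query = llm(QUERY_PREFIX + question)
    # 2. The external knowledge system finds the relevant facts.
    evidence = search(query, DOCUMENTS)
    # 3. The language model is engaged again to phrase the findings.
    return llm(f"Answer '{question}' {EVIDENCE_MARKER}{' '.join(evidence)}")


def fake_llm(prompt: str) -> str:
    # Offline stand-in for a real LLM: passes the question through as the
    # query, and returns the retrieved evidence verbatim as the "answer".
    if prompt.startswith(QUERY_PREFIX):
        return prompt[len(QUERY_PREFIX):]
    return prompt.split(EVIDENCE_MARKER, 1)[1]


print(answer("How tall is Mount Everest?", fake_llm))
```

In a real system the first and third steps would be genuine model calls and the search step would hit an actual index; the point is only that the language model sits at both ends while the facts come from elsewhere.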

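But back to the made-up ChatGPT answer above: no, unsupervised learning is a big thing in AI as well, so artificial neural networks are by no means limited to supervised learning on labeled data. The initial pretraining of language models like ChatGPT is itself done on huge amounts of unlabeled text.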
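To illustrate what learning without labels looks like, here is a minimal k-means clustering sketch in plain Python. k-means is one of the classic unsupervised algorithms, chosen here purely as an example; it is not how ChatGPT is trained. The function name `kmeans_1d` and the toy data are my own: the algorithm is never told which group any point belongs to, yet it discovers the two clusters on its own.

```python
import random
from typing import List, Tuple


def kmeans_1d(points: List[float], k: int, iters: int = 20,
              seed: int = 0) -> Tuple[List[float], List[int]]:
    """Cluster 1-D points into k groups using only the data itself (no labels)."""
    rng = random.Random(seed)
    # Start from k data points chosen at random as the initial centroids.
    centroids = rng.sample(points, k)
    assignment = [0] * len(points)
    for _ in range(iters):
        # Assignment step: each point joins the cluster with the nearest centroid.
        assignment = [min(range(k), key=lambda c: abs(p - centroids[c]))
                      for p in points]
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return centroids, assignment


# Unlabeled data: two obvious groups, but nothing tells the algorithm that.
data = [1.0, 1.2, 0.8, 9.0, 9.5, 10.1]
centroids, labels = kmeans_1d(data, k=2)
print(sorted(centroids))
print(labels)
```

Which group ends up as cluster 0 and which as cluster 1 depends on the random starting centroids; the grouping itself is what the algorithm learns.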

Even so, if ChatGPT gets it right 80% of the time, and users are aware that it isn't right 100% of the time, there is still utility there.

Le Baron wrote: Some people seemed to be fooled, even if only temporarily, because it is extremely compelling. The question which asks: 'how are they different from humans?' seems to me misplaced. Nothing in AI even looks like 'thinking'. It isn't 'thinking' it is just presenting things.

Not "human" thinking, but since the advent of computers, computer scientists have been generalising these concepts. Words like "language", "understanding", "thinking" and "intelligence" all have specific meanings in the human sense, but we now also have digital analogues of these (pardon the pun!), and so the concepts have become broader, with the human instantiations being a subcategory.

So when we say that ChatGPT doesn't really "understand", we mean that in the human sense, but there is still a sense in which ChatGPT does "understand". In computer science, after all, there is a whole field of study known as natural language understanding. Look at the progress that has been made in machine translation: the oldest translation systems simply did a literal word-for-word translation without grasping the overall meaning of the sentence, and so they got things very wrong. What has allowed these systems to improve is precisely that they have become better at understanding the overall meaning of the sentence, and even beyond that, the context of the sentence within the larger piece of text in which it appears. Or take something much simpler: before we even started building machine learning models for this sort of thing, early systems like UNIX came up with a command-line interface capable of understanding basic commands. It's not human understanding, but it is a kind of understanding that doesn't include consciousness as a component.

Now to your point about "thinking":

Google's PaLM language model has demonstrated reasoning capabilities: it can solve never-before-seen logic problems and explain the reasoning steps by which it arrived at its conclusion. This is not a human thinking up a solution to the problem, but a non-sentient computer solving exactly the kind of problem that would typically involve human thinking. That is a kind of thinking, just not human thinking.

Just in closing, the term "artificial intelligence" itself is commonly defined as methods that allow a computer to perform tasks that would typically require human intelligence. The goal of taking these previously human-only concepts and generalising them to include other types of "language", other types of "understanding", etc., is simply to reflect our expanding scientific progress. We're now at a point where computer scientists borrow insights from neuroscientists and neuroscientists borrow insights from computer scientists. That is the utility of being able to generalise a concept across two different fields of study.